AI Executive Order: Key Takeaways

Implications and opportunities from the President's sweeping policy action.

The Biden Administration’s recent executive order puts the White House in the driver’s seat of AI policy management and directs the sprawling executive branch to complete studies and develop industry guidance. The most specific requirements of the EO fall on those few firms training “dual-use foundation models” or deploying massive compute clusters. However, the EO does suggest some areas where a broader set of firms should engage. Read on for KSG’s summary and key takeaways.

The Biden Administration issued a wide-ranging executive order on AI on October 30, 2023. The EO is intended to chart a path for regulatory policy on “dual-use foundation” AI models. The EO covers broad themes of testing, evaluation, security, and societal impact (bias and economic) and establishes a set of definitions to focus risk management and policy efforts.

Like many EOs, the order is both a policy announcement and a starting gun—it puts the WH in the driver’s seat of AI policy management and directs the sprawling executive branch to complete studies and reports, as well as develop industry guidance and standards over the next six to twelve months.

The apparent top safety and security risks motivating this document are:

Proliferation risks to biosecurity given a lowering technological and know-how barrier to entry;

Geopolitical risks to national technological advantage from foreign person use of advanced computing;

Cybersecurity risks to critical infrastructure from insecure application integrations or increasing vulnerability exploitation capabilities; and

Disinformation risks to democratic systems from synthetic media, deep fakes, and AI-enabled foreign influence operations.

Below, we draw attention to some key aspects of the 110-page document that intersect with cybersecurity, national security, and the direction of travel for AI regulation. The key items most relevant to the cybersecurity industry are:

Cybersecurity requirements on dual-use model developers (#1 below)

Expansion of programs on automated vulnerability discovery and mitigation (#6)

Development of AI Risk Management frameworks for critical infrastructure (#3)

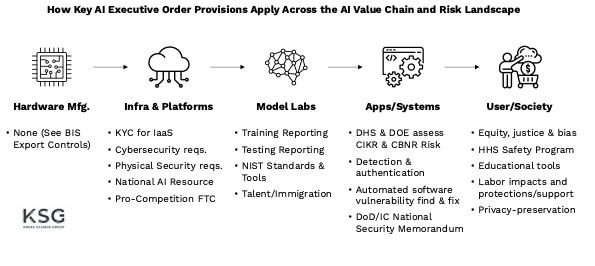

Note that the AI value chain is quite complex, and these EO provisions apply to different parts of the risk landscape:

Implications and Opportunities

The most specific requirements of the EO fall on those few firms training frontier large-language models or deploying massive compute clusters. However, the EO does suggest some areas where a broader set of firms should engage:

USG contractors and vendors should pay close attention to OMB, GSA, and other government procurement guidance, in particular changes to the FedRAMP program.

AI application and tool developers should look to participate in government pilot programs (e.g., on automated vulnerability solutions) and respond to agency announcements or RFIs to support synthetic media detection & authentication tools as well as privacy-preserving technologies.

Cybersecurity and industry experts should engage with NIST and CISA (as well as DOE, where relevant) to suggest useful frameworks, methodologies, or approaches to manage AI risk across critical infrastructure sectors.

Employers should consider forthcoming AI talent and immigration policy proposals.

The EO will drive several specific positive policy initiatives, including:

Establishing the National AI Research Resource, a $140M effort to promote broader and open AI development outside of current dominant incumbents;

Easing visa requirements for skilled AI labor, ensuring the US can compete in the global talent race;

Supporting US international leadership in forming global regulatory and risk management frameworks that align to democratic values; and

Establishing important guardrails in key areas where risks and opportunities from AI systems are each magnified, including healthcare, drug discovery, synthetic biology, cybersecurity, critical infrastructure protection, and criminal justice.

Key Takeaways

#1: Reporting Requirements are Based on Size of Models and Their Capabilities

The EO defines a "dual-use foundation model" as an “AI model that is trained on broad data, generally uses self-supervision, contains at least tens of billions of parameters, is applicable across a wide range of contexts, and that exhibits, or could be easily modified to exhibit, high levels of performance at tasks that pose a serious risk to security, national economic security, national public health or safety, or any combination of those matters.”

In particular, the EO is focused on models that:

Substantially lower the barrier of entry for non-experts to design, synthesize, acquire, or use chemical, biological, radiological, or nuclear (CBRN) weapons;

Enable powerful offensive cyber operations through automated vulnerability discovery and exploitation against a wide range of potential targets of cyber-attacks; or

Permit the evasion of human control or oversight through means of deception or obfuscation.

Reporting requirements apply to large computing clusters and to models trained using a quantity of computing power just above the current state of the art, roughly the level of a cluster of ~25-50K H100 GPUs. These parameters can change at the discretion of the Commerce Secretary, but the specified size and interconnection measures are intended to bring only the most advanced “frontier” models into the scope of future reporting and risk assessment.

This threshold reflects an acute concern that the exponential progress of GPT-like models could lead to a strategic technological surprise and potential runaway capabilities. KSG assesses that Commerce may bring these limits down in the next two years, as few firms are able to procure extreme computational capacity. Experts note that the growth in compute power is likely to slow in the coming years.
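To make the scale of the threshold described above concrete, the arithmetic can be sketched as follows. The two threshold constants are the interim values stated in the EO (Sec. 4.2(b)): 10^26 total operations for a training run and 10^20 operations per second of theoretical peak capacity for a cluster. Everything else is an assumption for illustration only: the per-GPU peak figures (2e15 and 4e15 operations per second) are round numbers, the 40% utilization is a guess, and the function names are hypothetical. This is one way to arrive at a range like 25-50K GPUs, not necessarily how the estimate in this article was derived.

```python
import math

# Interim thresholds stated in the EO (Sec. 4.2(b)); Commerce may revise them.
MODEL_THRESHOLD_OPS = 1e26          # total operations used to train a model
CLUSTER_THRESHOLD_OPS_PER_S = 1e20  # theoretical peak capacity of one cluster

SECONDS_PER_DAY = 86_400


def gpus_to_reach_cluster_threshold(peak_ops_per_gpu: float) -> int:
    """Number of GPUs whose combined theoretical peak meets the cluster threshold."""
    return math.ceil(CLUSTER_THRESHOLD_OPS_PER_S / peak_ops_per_gpu)


def days_to_reach_model_threshold(
    n_gpus: int, peak_ops_per_gpu: float, utilization: float
) -> float:
    """Days of continuous training needed to accumulate the model threshold."""
    sustained_ops_per_s = n_gpus * peak_ops_per_gpu * utilization
    return MODEL_THRESHOLD_OPS / sustained_ops_per_s / SECONDS_PER_DAY


# ASSUMPTION: round per-GPU peak throughput figures, chosen for illustration.
for assumed_peak in (2e15, 4e15):
    n = gpus_to_reach_cluster_threshold(assumed_peak)
    # ASSUMPTION: 40% of theoretical peak is sustained during training.
    days = days_to_reach_model_threshold(n, assumed_peak, utilization=0.4)
    print(f"peak={assumed_peak:.0e} ops/s per GPU -> {n} GPUs, ~{days:.0f} days")
```

Under these assumptions, a cluster sitting exactly at the capacity threshold would need roughly a month of sustained training to cross the model threshold. Different assumptions about hardware precision or utilization shift both numbers considerably.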

#2: Frontier Model Developers Must Report Training and Safety Test Results to USG

Models that meet the above requirements will be subject to increased reporting requirements through the Secretary of Commerce. The EO leans on the Defense Production Act for the authority to require this reporting. Under Title VII (Sec. 705) the Commerce Secretary may (via delegated Presidential authority) “by regulation, subpoena, or otherwise obtain such information from any person as may be necessary or appropriate, in his discretion, to the enforcement or the administration of this act [DPA]” to support industrial base assessments. This is a somewhat novel use of this authority and may lead to legal challenges.

Firms developing such covered models must notify the USG when training their model and must share the results of all “red-team” or adversarial safety tests. Specifically, these firms must report:

“(A) the physical and cybersecurity protections taken to assure the integrity of the training process against sophisticated threats;

(B) the ownership and possession of the model weights of any dual-use foundation models, and the physical and cybersecurity measures taken to protect those model weights; and

(C) the results of any developed dual-use foundation model's performance in relevant AI red-team testing guidance developed by NIST… and a description of any associated measures the company has taken to meet safety objectives, such as mitigations to improve performance on these red-team tests and strengthen overall model security.”

This provision will drive demand for more defensible and rigorous threat assessments and cybersecurity protections by AI foundation model developers. Notably, this requirement does not force model developers to disclose training data, which often contributes to bias and security risks through malicious acts such as data poisoning.

This omission is likely due to the DPA’s lack of authority for that degree of transparency. Proposed legislation in the US and the AI Act in the EU require the disclosure and evaluation of some model data.

#3: NIST Will Lead Development of AI Risk Management Guidance and Standards

The EO directs the National Institute of Standards and Technology (NIST) to develop industry standards for safe, secure, and trustworthy AI systems. NIST will create three resources within 270 days:

A generative AI risk management framework intended to accompany NIST’s AI Risk Management Framework;

A framework to apply secure development practices to generative AI and dual-use foundation models; and

An initiative to create guidance and benchmarks for evaluating and auditing AI capabilities. This will focus on capabilities through which AI could cause harm, such as cybersecurity and biosecurity.

NIST is the appropriate organization to lead this standard setting, as its best practices already inform AI safety through the AI Risk Management Framework and inform other relevant fields such as cybersecurity.

#4: Know Your Customer Rules Will Apply for Infrastructure as a Service (IaaS)

The Executive Order encourages regulation to increase transparency around foreign usage and reselling of large AI models with the potential to aid malicious cyber activity. In the next 90 days, the Secretary of Commerce will propose regulation that:

Requires that U.S. IaaS providers disclose to the Secretary of Commerce when a foreign person transacts with that IaaS Provider to train a large AI model with potential capabilities that could be used in malicious cyber-enabled activity (a "training run").

Requires that U.S. IaaS-provider foreign resellers disclose to the Secretary of Commerce when a foreign person buys and uses their product (“IaaS Providers prohibit foreign resellers of their services from provisioning their services unless they submit to the U.S. IaaS Provider a report for the Secretary of Commerce when a foreign person transacts with that reseller to use a U.S. IaaS product to conduct a training run…”).

Know Your Customer (KYC) rules will apply specifically in the cases of foreign entities using large AI models from US-based companies and their foreign resellers. Previously, Executive Order 13984 called for the Secretary of Commerce to propose regulation on identity verification for foreign individuals who obtain accounts with US-based IaaS providers.

Commerce issued an advance notice of proposed rulemaking on this in September 2021. While there has not been a public announcement since, Commerce is putting the pieces in place for “KYC for the Cloud.”

#5: DHS and DOE Will Assess Critical Infrastructure, Biosecurity, and CBRN Risks

The Executive Order outlines a plan for DHS and DOE to evaluate and then recommend mitigations for key AI safety risks in critical infrastructure.

First, within 90 days, the Cybersecurity and Infrastructure Security Agency (CISA) will coordinate across agencies to assess risks of using AI in critical infrastructure. Risk assessments will focus on ways in which deploying AI may make critical infrastructure more vulnerable to critical failures, physical attacks, and cyber-attacks.

Within 180 days, the Secretary of Homeland Security will work with other regulators to incorporate NIST’s AI Risk Management Framework (NIST AI 100-1) into relevant safety guidelines for critical infrastructure. They will also establish an Artificial Intelligence Safety and Security Advisory Committee.

Additionally, within 180 days, the Secretary of Homeland Security and Director of the Office of Science and Technology Policy will evaluate the potential for AI to contribute to chemical, biological, radiological, and nuclear (CBRN) threats. This will include potential applications of AI to counter these threats.

CISA’s expertise and relationship with the private sector position it well to play a coordinating role in these efforts.

The timeline in the Executive Order indicates an intentional order of operations that connects an initial evaluation of risks with subsequent recommendations.

#6: DoD and DHS Will Run a Pilot Project on Automated Software Vulnerability Detection and Mitigation

Within 180 days, the Secretary of Defense and Secretary of Homeland Security will complete a pilot project to use AI capabilities, such as large language models, to automatically detect and remediate vulnerabilities in critical US government systems.

As companies explore the use of AI models for cybersecurity tools, government agencies will focus on similar tooling for US networks. This reduces national security risks associated with using large language models with sensitive data.

While government cybersecurity capabilities have historically been cutting edge, it remains to be seen if government agencies can implement these systems as efficiently as private companies given private sector-led development in this space.

#7: Commerce Owes a Report on Detection and Authentication Techniques for Synthetic Media

The Secretary of Commerce has 240 days to publish a report on detecting and authenticating synthetic media with an emphasis on:

(A) Authenticating content and tracking its provenance;

(B) Labeling synthetic content, such as using watermarking;

(C) Detecting synthetic content;

(D) Preventing generative AI from producing Child Sexual Abuse Material (CSAM) or nonconsensual intimate imagery of real individuals;

(E) Software for the above purposes; and

(F) Auditing and maintaining synthetic content.

The Secretary of Commerce will subsequently work with the Director of the Office of Management and Budget to provide and periodically update guidance on existing tools and practices for digital content authentication and synthetic content detection measures.

Synthetic media detection is a pressing issue, especially with the upcoming presidential election. Already, candidates have used AI in political advertisements to boost their image and generate speech from opposing candidates. Granted, malicious actors are unlikely to follow ‘opt in’ guidelines, but Commerce’s report on synthetic media detection may act as the basis for further policies and enforcement mechanisms against entities that fail to abide by the rules.

#8: Commerce Will Issue Guidelines for Privacy-Preserving Tech and Techniques

In response to growing concerns about data privacy, the Executive Order also calls for guidelines about privacy preservation in AI. These focus on differential privacy, a technique in which calibrated noise is added to data, or to the results of computations on it, to protect the privacy of individuals while still allowing for analysis and model training.

NIST will create guidelines for agencies to evaluate the efficacy of differential-privacy-guarantee protections, including for AI. The guidelines will describe types of differential-privacy safeguards and common risks to realizing differential privacy in practice.
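For readers unfamiliar with the technique, the idea of adding noise to protect individuals can be sketched in a few lines. The example below uses the Laplace mechanism on a counting query, which is one common way to achieve differential privacy; it adds noise to a query result rather than to the dataset itself, and it is not drawn from the EO or from any NIST guidance. The function names, the sample data, and the choice of epsilon are all hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean `scale`
    follows a Laplace distribution centered at zero with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of records matching `predicate`.

    A count changes by at most 1 when one person's record is added or removed,
    so its sensitivity is 1 and the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: ages of individuals in a small dataset.
ages = [23, 35, 41, 29, 52, 67, 38, 45, 31, 60]
rng = random.Random(0)  # seeded only to make the sketch repeatable

noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

Each individual answer is perturbed, but averages over many queries or large populations remain close to the truth, which is why the approach still supports analysis. In practice, repeated queries consume a privacy budget, one of the practical risks that evaluation guidelines would need to address.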

Conclusion

While this EO represents the most formal policy action by the U.S. thus far on AI regulation, others are moving ahead as well. The UK hosted global leaders (including a Vice Minister from China) and tech executives at Bletchley Park this week to build an international consensus on AI safety. The EU is also pushing to pass its own AI Act by the end of the year.

This White House action is quite ambitious and sweeping in scope. It sets aggressive timelines for sprawling branches of the Executive to coordinate, assess, and issue policy on a complex and rapidly evolving technology domain.

Meanwhile, the pace of frontier model capabilities, application development and global adoption is unlikely to slow. This genie is out of the bottle and policymakers will continue to play catch up to try to contain potential risks.